Nov-2019

Digitalisation is only scratching the surface

Digitalisation usually means upgrading a few elements of technology or workflows, leaving a partial digital twin when a holistic approach is needed.

ANDREW MCINTEE and ARJUN BALAKRISHNAN

KBC (A Yokogawa Company)


Article Summary

The refining industry is constantly searching for profitability, typically found by squeezing a little more production from existing assets or improving the way things are done. Low-hanging fruit has largely been picked, and improving further with current methods is difficult and costly in the industry’s prevailing compartmentalised organisation. With digital transformation, we can move beyond doing the same things better and eliminate this ‘silo’ bias. Digitalisation allows every part of ‘change what’s going on faster’ to be improved; every element is enhanced, and profitability benefits are magnified.

Profitability is the goal, and specifically for the industry this means improvements in value in the following areas:

• Reduced investment cost

• Reduced operating cost

• Optimum production for the current market conditions

• Balancing asset maintenance cost and availability

• Focused employee support

• Reduced risk.

This value is being sought constantly. Internal departments, technology and service companies, and contractors are all tasked with improving one or more of the above value points. Existing biases, lack of priority, capital constraints, and the need for business justification all prevent full value being captured at one time or another. All too often, solutions are broken down and applied only to the one area that helps the key stakeholder most. At best, other areas and stakeholders get some reflected value; at worst, the value created for one is to the detriment of another. This issue is not unique to the oil and gas industry; all businesses suffer similar issues. However, we can see where digitalisation has helped some businesses create value and benefit all areas through a holistic solution. Sometimes this has been completely disruptive; at other times it has greatly enhanced what companies were already doing well.

The oil and gas industry has made some effort in digitalisation, but not to the extent of other industries, and its firmly established legacy technology is limiting opportunities for innovation. One reason is that other industries have reasonably linear work processes, where steps follow one another along a well-defined path. The oil and gas industry is different: it has very complex, iterative work processes across many areas. For instance, in the optimisation of downstream feed networks and production facilities, there are a great many interactions between strategy, planning, scheduling, process engineers, reliability specialists, unit licensors, utility engineers, and asset suppliers, to name but a few (see Figure 1). The final design is a myriad of trade-offs, assumptions, and assumed overall best value that in some cases has taken many years to manifest across workflows. Digitalisation will enable technology to integrate the current engineering silos more deeply, quicken iterative workflows, simplify the complex, and deliver huge value in optimisation across all stakeholders.

Finding out what’s going on

The current problems are well known throughout the industry, and everyone has an opinion on what the most pressing and solvable problem is at any given time. Personal expertise, experience, trusted data sources, and available tools limit the information available to each individual group. It is well recognised that this is not ideal, and regular meetings are set, both in an operations environment and in project design, to update others on the data and information each group has.

The transfer of information is intended to give a group more data on which to make a further, more informed judgement. Where data is not transferred, or is transferred badly, assumptions need to be made, and time is spent judging the worth of those assumptions as well as rectifying the consequences once an assumption is proved wrong. The cost of all this is time.

Digitalisation enables better outcomes in two steps: first, data is made ready; second, data is connected. This creates a single source of truth that is available to all stakeholders and is constantly updated in real time.

To counter poor data transfer in non-digital workflows, the common response is to share more data. But adding more data without reconciling it and understanding what it is, where it fits, and how it connects is just as bad. You may have the best tools, the most experience, and the key expertise, but with data, ‘garbage in, garbage out’ is often the outcome. Before data is used to make decisions or fed to software applications, a process needs to be in place to systematically assure its quality. It will cost a little more to sort the data out early on, but that is better than blindly trusting it and needing to rectify your actions later. Only then is it fair to declare it a data store (the ‘data lake’) and the system of record.

Once the data process is established, the following questions can be asked:

• Data sufficiency – do you collect all the necessary values?

• Data trust – are instruments of adequate accuracy and reliability for their intended purpose?

• Data propagation – when a change is made in the field, how does the system of record know about it?

• Data governance – is there an ownership record for each data point?
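As an illustration only, the four questions above could be turned into an automated screening step that runs before data is admitted to the system of record. The sketch below is a minimal example under stated assumptions: the `TagRecord` fields, the tag names, and the accuracy and age thresholds are all hypothetical and are not taken from any particular historian or vendor tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TagRecord:
    """One measured value in a hypothetical system of record."""
    tag: str
    value: Optional[float]
    accuracy_pct: Optional[float]    # instrument accuracy, % of reading
    owner: Optional[str]             # named owner of the data point
    last_updated_h: Optional[float]  # hours since last sync with the field


def readiness_issues(records, required_tags,
                     max_accuracy_pct=2.0, max_age_h=24.0):
    """Return (tag, question) pairs for every failed readiness check.

    The thresholds are illustrative assumptions, not industry standards.
    """
    by_tag = {r.tag: r for r in records}
    issues = []
    for tag in sorted(required_tags):
        rec = by_tag.get(tag)
        # Sufficiency: is the required value collected at all?
        if rec is None or rec.value is None:
            issues.append((tag, "sufficiency"))
            continue
        # Trust: is the instrument accurate enough for its purpose?
        if rec.accuracy_pct is None or rec.accuracy_pct > max_accuracy_pct:
            issues.append((tag, "trust"))
        # Propagation: has the record been refreshed recently enough?
        if rec.last_updated_h is None or rec.last_updated_h > max_age_h:
            issues.append((tag, "propagation"))
        # Governance: does the data point have a named owner?
        if not rec.owner:
            issues.append((tag, "governance"))
    return issues


if __name__ == "__main__":
    records = [
        TagRecord("FI-101", 120.5, 1.0, "process", 2.0),
        TagRecord("TI-205", 350.0, 5.0, None, 48.0),
    ]
    for tag, question in readiness_issues(records, {"FI-101", "TI-205", "PI-310"}):
        print(f"{tag}: fails data {question}")
```

A check like this does not replace engineering judgement; it only makes the gaps visible early, when they are cheapest to fix.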

Individual departments have many data sources, often on locked-down systems or hidden away in obscurely named folders. Connectivity covers the methods and tools needed to make this data accessible to all users and systems. This can run from physical infrastructure to cyber security, but for engineering departments this means simulation tools and physics models.

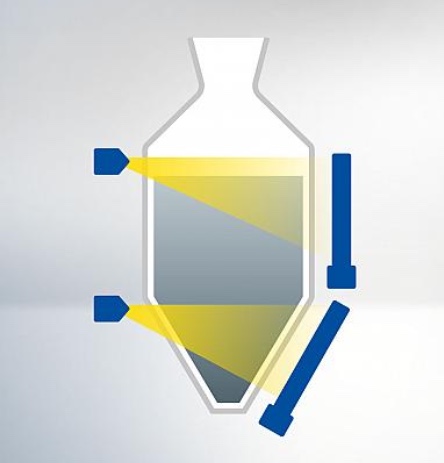

Currently, many hundreds of simulation models are created throughout the design stages. There are typically many revisions of each as a project progresses, or a whole new model when one assimilates another department’s output. With digitalisation, there is a single model that is set up as a digital twin, constantly synchronised with the system of record via always up-to-date asset models of the plant (see Figure 2).

A digital twin is relevant beyond the engineering department. It is a decision support tool that enables improved safety, reliability and profitability in design and operations. It is a virtual digital copy of a device, system or process that accurately mimics actual performance in real time, that is executable and can be manipulated, allowing a better outcome to be developed. The model develops as the asset develops and more history of both is attained.

The core of a digital twin is the simulation model, intrinsically integrated with the required thermodynamics, the system of record, and detailed analytical tools such as pipeline network or gas turbine power models. Connecting all the best tools, such that they run and update automatically, will massively reduce the time spent iterating information, sitting in update meetings, or making costly remedial fixes.

Making the change

Breaking each step down into the questions that need to be asked when considering change can make that change easier: why and when to change, what to change to, and how to change.
